In today’s digital landscape, platform owners face a constant battle against malicious actors seeking to exploit systems through spam, fraud, and abuse.

Every successful online platform eventually reaches a tipping point where growth attracts not only legitimate users but also spammers, fraudsters, and bad actors. This reality presents platform operators with a challenging dilemma: how to maintain an open, accessible user experience while protecting the integrity of their ecosystem. The answer often lies in strategically introducing friction—deliberate obstacles that slow down or prevent abusive behavior without alienating genuine users.

Understanding when and how to implement these protective measures can mean the difference between a thriving community and a platform overrun with spam and abuse. This comprehensive guide explores the critical decision points, implementation strategies, and best practices for introducing friction to safeguard your platform.

🎯 Understanding the Balance Between Openness and Protection

The fundamental challenge facing platform designers is maintaining equilibrium between accessibility and security. Too little friction creates an environment where abusers operate freely, degrading the experience for legitimate users. Too much friction frustrates genuine users, potentially driving them away before they experience your platform’s value.

Signup-funnel data generally shows that each additional step in a signup or action flow loses some share of users. Yet platforms without adequate safeguards eventually suffer even greater user attrition as spam and abuse make the service unusable. The key is identifying the optimal friction points that maximize protection while minimizing impact on legitimate users.

Consider Twitter’s early years, when account creation required only an email address. This low-friction approach fueled rapid growth but also enabled massive bot networks. As the platform matured, it added verification steps such as phone number verification, CAPTCHA challenges, and behavioral analysis, each intended to target abusive patterns while keeping the service accessible to real users.

Recognizing the Warning Signs: When Your Platform Needs More Friction

Knowing when to introduce friction requires vigilant monitoring of platform health indicators. Several red flags signal that protective measures have become necessary.

Unusual Growth Patterns and Velocity

Sudden spikes in account creation, especially during off-peak hours or from specific geographic regions, often indicate automated abuse. While viral growth can produce legitimate spikes, these typically follow predictable patterns aligned with content sharing and social proof. Suspicious growth shows irregular patterns, including accounts created in rapid succession from similar IP addresses or with sequential email patterns.
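To make the subnet signal concrete, here is a minimal Python sketch that counts signups per IPv4 /24 subnet over a sliding time window. The class name, the /24 grouping, and the thresholds are illustrative assumptions. The sketch ignores the sequential-email signal entirely and assumes timestamps arrive in non-decreasing order.

```python
import ipaddress
from collections import defaultdict, deque


class SignupVelocityMonitor:
    """Flags IPv4 /24 subnets whose signup count exceeds a limit within a sliding window."""

    def __init__(self, window_seconds: float = 3600.0, max_signups: int = 20):
        # Illustrative defaults only; tune against your own baseline traffic.
        self.window_seconds = window_seconds
        self.max_signups = max_signups
        self._events = defaultdict(deque)  # subnet -> timestamps of recent signups

    def record(self, ip: str, timestamp: float) -> bool:
        """Record one signup and return True if its subnet is now over the limit."""
        subnet = str(ipaddress.ip_network(f"{ip}/24", strict=False))
        events = self._events[subnet]
        events.append(timestamp)
        # Evict signups that have fallen out of the sliding window
        # (assumes timestamps are non-decreasing).
        while timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_signups


monitor = SignupVelocityMonitor(window_seconds=60, max_signups=3)
flags = [monitor.record(f"203.0.113.{i}", float(i)) for i in range(5)]
print(flags)  # [False, False, False, True, True]
```

Grouping by subnet rather than by exact IP is the design choice that matters here: scripted signups often rotate addresses within a small range, so per-IP counting alone would miss them.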

Content Quality Degradation

When spam content begins appearing regularly in user feeds, search results, or communication channels, friction becomes essential. Early signs include repetitive messages, excessive links to external sites, or content that seems disconnected from your platform’s purpose. User reports and complaints about spam provide valuable qualitative data that quantitative metrics might miss initially.

Increased Support Burden

A rising volume of support tickets related to spam, scams, or abusive interactions indicates that automated defenses are insufficient. When your team spends increasing time manually reviewing reports and removing bad actors, systemic friction mechanisms become more cost-effective than reactive moderation.

Resource Exhaustion and System Strain

Abusive behavior often manifests as unusual load on infrastructure—excessive API calls, database queries, or bandwidth consumption that doesn’t correlate with legitimate usage patterns. These technical indicators may precede visible content problems, providing early warning that friction mechanisms are needed.
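One simple way to surface this kind of strain is to compare each client’s request volume against the rest of the population. The sketch below assumes you already aggregate API calls per client for some interval. It flags outliers with a median-based (modified) z-score, a robust statistic that a single runaway client cannot skew. The function name is hypothetical. The 0.6745 scaling constant and 3.5 cutoff are the conventional choices for this statistic, not values tuned for any particular platform.

```python
import statistics


def flag_heavy_clients(calls_per_client: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return clients whose call volume is an outlier by the modified z-score.

    Uses the median and median absolute deviation (MAD) so that a single
    extreme client cannot inflate the baseline it is compared against.
    """
    counts = list(calls_per_client.values())
    if len(counts) < 3:
        return []  # too few clients to establish a baseline
    median = statistics.median(counts)
    mad = statistics.median([abs(n - median) for n in counts])
    if mad == 0:
        return []  # no spread to score against; rely on fixed quotas instead
    return sorted(
        client
        for client, n in calls_per_client.items()
        if 0.6745 * (n - median) / mad > threshold
    )


hourly_calls = {"a": 100, "b": 110, "c": 95, "d": 105, "e": 98, "f": 5000}
print(flag_heavy_clients(hourly_calls))  # ['f']
```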

Strategic Friction Points: Where to Implement Protective Measures

Not all friction is created equal. The most effective protective measures target specific abuse vectors while creating minimal impediments for legitimate users.

Account Creation and Onboarding 🔐

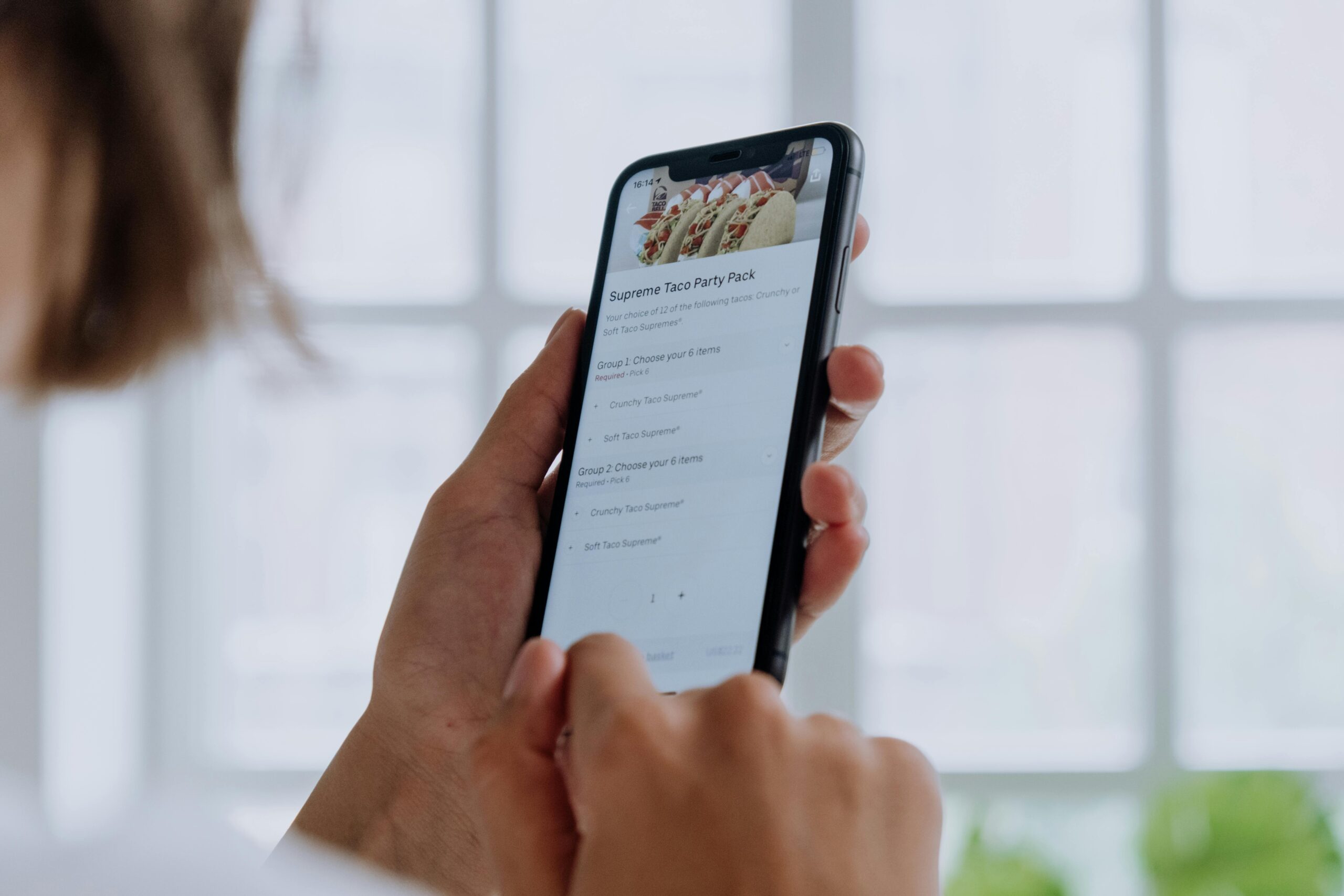

The registration process offers the first opportunity to filter bad actors. Progressive friction works well here—starting with minimal requirements and adding verification steps based on risk signals. Low-risk users might complete registration with just email verification, while suspicious patterns trigger additional requirements like phone verification or identity confirmation.
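A progressive flow like this can be expressed as a small scoring function. In the sketch below, the three risk signals, their weights, and the step names (`email`, `phone`, `identity_check`) are all hypothetical stand-ins. A real system would draw on whatever signals it can actually observe.

```python
from dataclasses import dataclass


@dataclass
class SignupSignals:
    # Hypothetical signals; what a platform can actually observe will vary.
    disposable_email: bool
    datacenter_ip: bool
    signups_from_ip_last_hour: int


def required_steps(s: SignupSignals) -> list[str]:
    """Start everyone at email verification and escalate as risk accumulates."""
    risk = 0
    if s.disposable_email:
        risk += 2
    if s.datacenter_ip:
        risk += 1
    if s.signups_from_ip_last_hour > 5:
        risk += 2

    steps = ["email"]
    if risk >= 2:
        steps.append("phone")
    if risk >= 4:
        steps.append("identity_check")
    return steps


print(required_steps(SignupSignals(False, False, 1)))  # ['email']
print(required_steps(SignupSignals(True, False, 9)))   # ['email', 'phone', 'identity_check']
```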

Email verification remains foundational but is easily circumvented with disposable addresses. Phone number verification provides stronger identity binding, though it creates accessibility concerns for users without phones or those protective of privacy. CAPTCHA challenges add friction specifically for automated systems, though sophisticated bots increasingly defeat traditional implementations.

Content Publication and Sharing

Once users are onboarded, protecting content channels requires different friction strategies. Rate limiting prevents spam floods by restricting how frequently users can post, comment, or message. New accounts might face stricter limits that gradually relax as users demonstrate legitimate behavior.
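Rate limiting that tightens for new accounts is often built on a token bucket. Each action spends a token, and tokens refill at a steady rate up to a fixed burst capacity. This Python sketch assumes a single in-process bucket per account and uses made-up tiers: accounts under seven days old get a burst of three posts and roughly twelve per hour sustained. Production systems usually keep this state in shared storage.

```python
class TokenBucket:
    """Token bucket: `capacity` caps bursts, `refill_per_second` caps the sustained rate."""

    def __init__(self, capacity: float, refill_per_second: float, now: float):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = capacity
        self.updated = now

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the last check, without exceeding capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = max(self.updated, now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def bucket_for_account(account_age_days: float, now: float) -> TokenBucket:
    # Hypothetical tiers: accounts younger than a week get a small burst and slow refill.
    if account_age_days < 7:
        return TokenBucket(capacity=3, refill_per_second=1 / 300, now=now)  # ~12 posts/hour
    return TokenBucket(capacity=20, refill_per_second=1 / 30, now=now)  # ~120 posts/hour


new_user = bucket_for_account(account_age_days=1, now=0.0)
print([new_user.allow(0.0) for _ in range(4)])  # [True, True, True, False]
```

Relaxing limits as users demonstrate legitimate behavior is then just a matter of issuing a larger bucket once the account crosses a tier boundary.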

Content filters analyzing text, images, and links can automatically block or flag suspicious material. However, purely automated filtering risks false positives that frustrate users. A hybrid approach where machine learning flags suspicious content for review balances efficiency with accuracy.
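The hybrid approach amounts to routing on a classifier’s confidence. The sketch assumes some model already produces a spam probability between 0 and 1. The function name and the two thresholds are placeholders to tune against your own false-positive tolerance and review capacity.

```python
def route_content(spam_score: float, block_at: float = 0.95, review_at: float = 0.60) -> str:
    """Route an item using a classifier's spam probability in [0, 1].

    Only near-certain spam is blocked automatically; the uncertain middle band
    goes to human moderators, which limits the cost of false positives.
    """
    if not 0.0 <= spam_score <= 1.0:
        raise ValueError("spam_score must be between 0 and 1")
    if spam_score >= block_at:
        return "block"
    if spam_score >= review_at:
        return "human_review"
    return "publish"


for score in (0.10, 0.72, 0.99):
    print(score, route_content(score))
```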

Requiring account age or engagement thresholds before unlocking certain features effectively segments new users from established community members. Spammers typically want immediate access to blast content, so time-based restrictions naturally filter many abusive accounts.
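Feature gating by account age and engagement can be as simple as a lookup table. The features and thresholds here are hypothetical. “Approved posts” stands in for whatever engagement signal a platform trusts.

```python
from dataclasses import dataclass


@dataclass
class Account:
    age_days: int
    approved_posts: int


# Hypothetical gates: feature -> (minimum account age in days, minimum approved posts).
FEATURE_GATES = {
    "post_links": (3, 5),
    "send_direct_messages": (7, 10),
    "create_group": (30, 50),
}


def unlocked_features(acct: Account) -> set[str]:
    """A feature unlocks only when both the age and engagement thresholds are met."""
    return {
        feature
        for feature, (min_age, min_posts) in FEATURE_GATES.items()
        if acct.age_days >= min_age and acct.approved_posts >= min_posts
    }


print(sorted(unlocked_features(Account(age_days=10, approved_posts=12))))
# ['post_links', 'send_direct_messages']
```

Requiring both conditions matters. An account that simply sits idle for a month does not unlock link posting, which blunts the common tactic of registering accounts in bulk and “aging” them before use.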

Financial Transactions and Monetization

Platforms involving payments, virtual goods, or monetization face particular fraud risks. Multi-factor authentication for financial actions provides essential security without significantly impacting user experience for legitimate transactions. Payment verification, holding periods for new accounts, and transaction limits help prevent fraudulent purchases and money laundering.
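Holding periods and limits that relax with account age might look like the following sketch. The tier boundaries, the amounts, and the function names are assumptions for illustration, not recommended values. Amounts are stored in integer cents to avoid floating-point rounding.

```python
from datetime import date, timedelta


def transaction_terms(account_created: date, today: date) -> tuple[int, timedelta]:
    """Return (per-transaction limit in cents, payout holding period) by account age.

    Tier boundaries and amounts are hypothetical placeholders.
    """
    age_days = (today - account_created).days
    if age_days < 30:
        return 10_000, timedelta(days=7)   # $100 cap, funds held a week
    if age_days < 180:
        return 100_000, timedelta(days=2)  # $1,000 cap, two-day hold
    return 1_000_000, timedelta(0)         # $10,000 cap, no hold


def allow_transaction(amount_cents: int, account_created: date, today: date) -> bool:
    limit, _hold = transaction_terms(account_created, today)
    return amount_cents <= limit


print(transaction_terms(date(2024, 1, 1), date(2024, 1, 15)))
```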

Implementation Strategies That Minimize User Impact

How you implement friction matters as much as where you implement it. Thoughtful execution distinguishes protective measures from user-hostile obstacles.

Progressive and Risk-Based Friction

Rather than treating all users identically, adaptive systems apply friction proportional to risk. Machine learning models score behaviors in real-time, triggering additional verification only when suspicious patterns emerge. Established users with positive histories bypass friction that new or suspicious accounts encounter.

This approach maximizes protection while minimizing false positives. A user accessing your platform from their usual device and location might face no friction, while the same account accessed from a new country triggers additional authentication.
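The device-and-location example translates into a small step-up check. This sketch assumes the platform stores each account’s previously seen device IDs and countries. The step names are hypothetical, and treating an unfamiliar device in a familiar country as lower risk is an illustrative choice.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LoginHistory:
    known_devices: set[str] = field(default_factory=set)
    known_countries: set[str] = field(default_factory=set)


def login_challenge(history: LoginHistory, device_id: str, country: str) -> Optional[str]:
    """Return the extra verification step to require, or None for no added friction."""
    if country not in history.known_countries:
        return "mfa"         # new country: strongest step-up
    if device_id not in history.known_devices:
        return "email_code"  # familiar country, unfamiliar device: lighter check
    return None


history = LoginHistory(known_devices={"laptop-1"}, known_countries={"DE"})
print(login_challenge(history, "laptop-1", "DE"))  # None
print(login_challenge(history, "laptop-1", "BR"))  # mfa
```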

Transparent Communication

Users tolerate friction better when they understand its purpose. Clear messaging that explains why verification is needed, such as “We’re protecting your account from unauthorized access,” transforms friction from frustration into security assurance. Avoid vague error messages that leave users confused about what they did wrong or how to proceed.

Friction as Education

Onboarding friction can simultaneously protect platforms and educate users. Requiring users to review community guidelines before posting establishes behavioral expectations. Asking new users to complete profile information provides both verification data and helps them engage more effectively with your platform.

Common Friction Mechanisms and Their Trade-offs ⚖️

Different friction types offer varying effectiveness against specific threats while impacting user experience differently.

| Friction Type | Effectiveness Against Bots | Effectiveness Against Human Abuse | User Experience Impact |
|---|---|---|---|
| Email Verification | Low | Low | Minimal |
| Phone Verification | High | Medium | Moderate |
| CAPTCHA | Medium-High | None | Moderate |
| Rate Limiting | High | Medium | Low for normal use |
| Account Age Requirements | High | Medium | High for new users |
| Behavioral Analysis | High | High | Low for legitimate users |

Email Verification

Email confirmation remains universal despite limited effectiveness because it creates virtually no friction for legitimate users while adding minimal cost. It filters only the laziest abusers but serves as a foundation for communication and account recovery.

Phone Number Verification

SMS verification significantly raises the bar for abuse by requiring a resource (phone numbers) that’s harder to acquire in bulk than email addresses. However, it creates legitimate accessibility and privacy concerns. Some users lack phones, others use prepaid services that don’t receive automated messages, and privacy-conscious users resist providing phone numbers.

CAPTCHA and Challenge-Response Systems

Traditional text-based CAPTCHAs have become ineffective as computer vision has improved. Modern alternatives like reCAPTCHA analyze user interaction patterns and can often verify users in the background without presenting an explicit challenge. However, these systems raise privacy concerns through tracking and create accessibility issues for users with disabilities.

Behavioral Biometrics and Machine Learning

Advanced platforms analyze typing patterns, mouse movements, navigation flows, and engagement patterns to distinguish humans from bots. These invisible friction mechanisms provide strong protection without degrading user experience. However, they require significant technical sophistication and ongoing refinement as abusers adapt.

Measuring Friction Effectiveness Without Killing Growth 📊

Implementing friction without measuring its impact creates risk of over-correcting and damaging growth. Robust analytics must track both protection effectiveness and user impact.

Protection Metrics

Track the rate of spam content, abusive accounts, and fraudulent transactions before and after implementing friction. Monitor how quickly bad actors are detected and removed. Measure the recurrence rate—are banned abusers successfully creating new accounts, or does friction prevent their return?
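Recurrence rate needs some way to link a new account to a banned one. The sketch below assumes a hypothetical stable “fingerprint” per actor, for example a device or payment-instrument hash. It computes the share of banned actors who show up again, and comparing that number before and after a friction change indicates whether the change keeps abusers out.

```python
def recurrence_rate(banned: set[str], new_account_fingerprints: list[str]) -> float:
    """Fraction of banned actors who reappear among accounts created after the ban."""
    if not banned:
        return 0.0
    returned = banned & set(new_account_fingerprints)
    return len(returned) / len(banned)


banned_last_month = {"fp1", "fp2", "fp3", "fp4"}
signups_since = ["fp2", "fp9", "fp4", "fp4"]
print(recurrence_rate(banned_last_month, signups_since))  # 0.5
```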

User Impact Metrics

Conversion rates at each friction point reveal where legitimate users abandon the process. Completion time for verification steps indicates user frustration levels. Support ticket volume related to friction mechanisms shows where users struggle. User surveys and feedback provide qualitative context that numbers alone miss.
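Per-step conversion is simple arithmetic over funnel counts: users who completed a step divided by users who reached it. The function name, step names, and counts below are hypothetical.

```python
def step_conversion(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """For each step after the first, the share of users who reached it and completed it."""
    rates = []
    for (_, reached), (step, completed) in zip(funnel, funnel[1:]):
        rates.append((step, completed / reached if reached else 0.0))
    return rates


signup_funnel = [
    ("started_signup", 1000),
    ("email_verified", 800),
    ("phone_verified", 600),
    ("first_post", 300),
]
for step, rate in step_conversion(signup_funnel):
    print(f"{step}: {rate:.0%}")
```

Looking at per-step rates rather than only end-to-end conversion shows which friction point is responsible for a drop. In this made-up funnel, phone verification keeps 75% of users who reach it.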

A/B Testing Friction Implementation

When possible, test friction mechanisms with controlled populations before platform-wide rollout. Compare conversion rates, engagement metrics, and abuse rates between test and control groups. This data-driven approach prevents harmful changes while validating protective measures.
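For conversion (or abuse) rates, a standard way to compare test and control groups is a two-proportion z-test. This is a textbook sketch using only the Python standard library. The sample numbers describe a hypothetical experiment in which 8% of control users and 7% of users facing a new verification step complete signup.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: groups A and B have the same rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical experiment: control (A) vs. a new phone-verification step (B).
z, p = two_proportion_z_test(conv_a=400, n_a=5000, conv_b=350, n_b=5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these numbers the p-value lands slightly above 0.05, so the apparent drop is not conventionally significant. Settling the question would take a larger sample, ideally one sized before the test starts rather than stopping whenever the result looks interesting. The same function applies to comparing abuse rates between groups.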

Advanced Techniques for Sophisticated Platforms

As platforms mature and abusers become more sophisticated, basic friction mechanisms prove insufficient. Advanced techniques provide layered defense.

Reputation Systems and Trust Scores

Assigning reputation scores based on historical behavior allows dynamic friction adjustment. New users start with neutral scores and gain trust through positive actions—completing profiles, receiving positive interactions, avoiding reported content. Low-trust users face additional scrutiny while high-trust users enjoy streamlined experiences.
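A reputation score can start as little more than a clamped running total. The events, the weights, the neutral starting score of 50, and the tier boundaries in this sketch are all hypothetical. Real systems typically also decay old events and calibrate weights against observed outcomes.

```python
# Hypothetical event weights; real systems would calibrate these against outcomes.
EVENT_WEIGHTS = {
    "profile_completed": 5.0,
    "positive_interaction": 1.0,
    "report_upheld": -15.0,
}
NEUTRAL_SCORE = 50.0


def update_trust(score: float, event: str, lo: float = 0.0, hi: float = 100.0) -> float:
    """Apply an event's weight and clamp the result to [lo, hi]; unknown events are ignored."""
    return max(lo, min(hi, score + EVENT_WEIGHTS.get(event, 0.0)))


def friction_tier(score: float) -> str:
    """Map a trust score to how much friction the user should see."""
    if score < 30:
        return "extra_scrutiny"
    if score >= 70:
        return "streamlined"
    return "standard"


score = NEUTRAL_SCORE
for event in ["profile_completed", "report_upheld", "report_upheld"]:
    score = update_trust(score, event)
print(score, friction_tier(score))  # 25.0 extra_scrutiny
```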

Social Verification and Network Effects

Leveraging existing user relationships provides powerful verification. Requiring new users to connect with established members, or validating identities through social network connections, makes large-scale abuse harder. Invitations from trusted users can bypass certain friction for their invitees while making the inviter accountable.
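Inviter accountability can be tracked with a small ledger. In this sketch, whose class and method names are hypothetical, an invitee skips some onboarding friction while their inviter is in good standing. Each banned invitee counts as a strike against the inviter, who loses invite privileges after a set number of strikes.

```python
class InviteLedger:
    """Records who invited whom so that banned invitees count against their inviter."""

    def __init__(self, max_banned_invitees: int = 2):
        self.max_banned_invitees = max_banned_invitees
        self.inviter_of: dict[str, str] = {}
        self.strikes: dict[str, int] = {}

    def can_invite(self, user: str) -> bool:
        return self.strikes.get(user, 0) < self.max_banned_invitees

    def invite(self, inviter: str, invitee: str) -> bool:
        """Register an invite if the inviter is still in good standing."""
        if not self.can_invite(inviter):
            return False
        self.inviter_of[invitee] = inviter
        return True

    def bypasses_friction(self, user: str) -> bool:
        """Invitees skip some onboarding friction only while their inviter is in good standing."""
        inviter = self.inviter_of.get(user)
        return inviter is not None and self.can_invite(inviter)

    def on_ban(self, user: str) -> None:
        """Charge a strike to whoever invited the banned user, if anyone."""
        inviter = self.inviter_of.get(user)
        if inviter is not None:
            self.strikes[inviter] = self.strikes.get(inviter, 0) + 1


ledger = InviteLedger(max_banned_invitees=2)
ledger.invite("alice", "bob")
ledger.invite("alice", "carol")
ledger.on_ban("bob")
ledger.on_ban("carol")
print(ledger.can_invite("alice"))  # False
```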

Economic Friction

Small financial commitments (deposits, minimal fees, or bonds) deter abuse by making it economically infeasible at scale. This works particularly well for B2B platforms, where legitimate users readily accept nominal costs that become prohibitive when multiplied across the many accounts an abuser needs.
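The deterrent effect is easy to see with back-of-the-envelope arithmetic. Assuming a refundable deposit that abusers forfeit when banned, the sketch computes an abuser’s expected margin per account. The function name and the revenue, ban probability, and deposit figures are hypothetical.

```python
def expected_margin_per_account(revenue_before_ban: float, ban_probability: float,
                                deposit: float = 0.0, fee: float = 0.0) -> float:
    """Expected profit per account for an abuser who forfeits the deposit when banned.

    A legitimate user who is never banned gets the deposit back and only pays `fee`.
    """
    return revenue_before_ban - fee - ban_probability * deposit


# Hypothetical numbers: each spam account earns $2 before removal, and 90% are eventually banned.
for deposit in (0.0, 5.0):
    margin = expected_margin_per_account(2.0, 0.9, deposit=deposit)
    print(f"deposit {deposit:.2f}: {margin:.2f} per account, {margin * 10_000:,.0f} across 10,000 accounts")
```

With these assumed figures, a $5 deposit turns a spam account from an expected $2.00 gain into an expected $2.50 loss. A legitimate user who is never banned recovers the full deposit.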

The Ethics and Accessibility of Platform Friction 🤝

Platform protection must balance security with inclusivity. Friction mechanisms that exclude legitimate users from marginalized communities create ethical problems beyond business concerns.

Phone verification disproportionately affects users in developing regions where mobile service is less accessible. Government ID requirements exclude users without documentation, including homeless individuals, refugees, and those fleeing abuse. CAPTCHA systems create barriers for users with visual impairments or cognitive disabilities.

Responsible platforms consider these impacts and provide alternative verification paths. Manual review processes, community vouching systems, or graduated access that relaxes restrictions over time can accommodate users who can’t complete standard verification while maintaining overall platform integrity.

Adapting Friction as Your Platform Evolves

Friction requirements change as platforms mature and threat landscapes evolve. What works for a small community fails at scale, while measures necessary for large platforms would strangle early growth.

Early-stage platforms often prioritize growth, accepting higher abuse risk to minimize signup friction. As the user base expands and the platform gains value, both the incentive for abuse and the resources available for defense increase. Mature platforms typically implement layered friction systems that would have killed growth if introduced prematurely.

Regular review of friction mechanisms ensures they remain effective and appropriate. Abusers constantly develop new techniques to circumvent protections, requiring ongoing refinement. User feedback reveals where friction has become unnecessarily burdensome, allowing optimization that improves both security and experience.

Building a Culture of Platform Health

Technical friction mechanisms work best within a broader platform health strategy. Community guidelines, user education, transparent moderation, and responsive support create an environment where abuse is culturally unacceptable, not just technically difficult.

Empowering users to report abuse, providing clear escalation paths, and demonstrating that reports lead to action enlists your community as partners in platform protection. When users see that friction mechanisms protect their experience rather than just serving abstract platform interests, they tolerate necessary inconveniences more readily.

Platform teams must communicate openly about abuse challenges and protective measures. Transparency about what friction exists, why it’s necessary, and how it evolves builds trust. Users who understand they’re protected partners rather than potential threats accept verification requirements more positively.

Future-Proofing Your Friction Strategy 🔮

Emerging technologies and evolving user expectations will continue reshaping how platforms balance accessibility and protection. Decentralized identity systems may provide verification without centralized data collection. Biometric authentication could make account security seamless while preventing credential sharing. Advanced AI might distinguish human behavior from bots with minimal visible friction.

Privacy regulations increasingly constrain data collection that powers behavioral analysis and reputation systems. Platforms must develop friction mechanisms that protect without excessive surveillance. Techniques like differential privacy and on-device processing may allow behavioral analysis while respecting user privacy.
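As a concrete instance of differential privacy, the Laplace mechanism releases an aggregate count with calibrated noise. This sketch assumes each user contributes at most one event to the count (sensitivity 1) and uses Python’s `random` module for illustration. The function name is hypothetical. Floating-point noise generation has known subtleties, so production use calls for a vetted differential-privacy library rather than code like this.

```python
import random


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Assumes each user changes the count by at most 1 (sensitivity 1), so Laplace
    noise with scale 1/epsilon suffices. The difference of two independent
    exponential variables with mean b is Laplace(0, b)-distributed.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(42)
print(private_count(1234, epsilon=0.5, rng=rng))  # a noisy value near 1234 (noise scale 2)
```

Smaller values of epsilon give stronger privacy at the cost of noisier counts. The trade-off is explicit in the `scale = 1 / epsilon` line.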

The fundamental challenge remains constant: protecting platform value and user experience from those who would exploit openness for profit or harm. Strategic friction, thoughtfully implemented and continuously refined, provides the essential tool for maintaining this balance as your platform grows and evolves.

Success requires viewing friction not as a necessary evil but as a carefully crafted component of user experience—one that protects the community while welcoming legitimate members. When implemented with attention to user impact, accessibility, and continuous improvement, friction becomes an invisible guardian that enables rather than restricts platform growth.

Toni Santos is a user experience designer and ethical interaction strategist specializing in friction-aware UX patterns, motivation alignment systems, non-manipulative nudges, and transparency-first design. Through an interdisciplinary and human-centered lens, Toni investigates how digital products can respect user autonomy while guiding meaningful action across interfaces, behaviors, and choice architectures.

His work is grounded in a fascination with interfaces not only as visual systems, but as carriers of intent and influence. From friction-aware interaction models to ethical nudging and transparent design systems, Toni uncovers the strategic and ethical tools through which designers can build trust and align user motivation without manipulation.

With a background in behavioral design and interaction ethics, Toni blends usability research with value-driven frameworks to reveal how interfaces can honor user agency, support informed decisions, and build authentic engagement. As the creative mind behind melxarion, Toni curates design patterns, ethical interaction studies, and transparency frameworks that restore the balance between business goals, user needs, and respect for autonomy.

His work is a tribute to:

- The intentional design of Friction-Aware UX Patterns
- The respectful shaping of Motivation Alignment Systems
- The ethical application of Non-Manipulative Nudges
- The honest communication of Transparency-First Design Principles

Whether you’re a product designer, behavioral strategist, or curious builder of ethical digital experiences, Toni invites you to explore the principled foundations of user-centered design: one pattern, one choice, one honest interaction at a time.